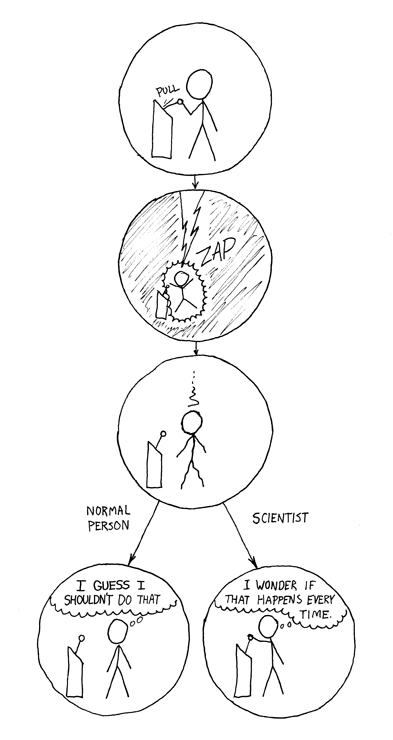

xkcd.com // CC 2.5

I certainly believe that scientific research is important. Research uncovers new knowledge and prunes away facts that are not accurate. However, in our society, research is also a coinage to justify views of reality. A Biblical scholar might invoke a sentence from the Bible before holding forth on his own interpretation or opinions. In a similar manner, a scientific study might be cited or a scientist quoted to justify that something is real before jumping off into one’s own thoughts, opinions, theories, or justifications. If a scientific result can be invoked, we can believe that something is true. Is there an unconscious? Freud said so, but he’s out of date. Are we intrinsically social beings? Evolutionary theorists argue. Does meditation really result in an altered state of consciousness? If I present results from research, preferably using a high tech measurement like a brain scan, or if I can come up with a theory that uses words like “neural nets” or “neurotransmitters,” then I can believe all of these things.

What’s wrong with this? Isn’t this science doing its job of uncovering truth? There are two things wrong with this. One is that not all knowledge is scientific knowledge. The second is that scientific results are often portrayed inaccurately in our society.

With regard to the first point, I’ll just give a few examples. Von Bertalanffy, a systems theory scientist, wrote that even a physicist will chase his (sic) hat when the wind blows it without knowing the mathematics determining which way the hat will blow. A saying often attributed to Einstein holds that not everything that is important can be measured, and not everything that can be measured is important.

But what I really want to talk about here is the second point. We are inundated with scientific results in newspapers, websites, and other places. Most often, a brief summary of research is followed by broad generalizations about what the research means. However, the outcome of research is not simple facts. Experiments are complicated things that must be evaluated by readers and understood in context. When I was a graduate student in psychology, every class included practice in critiquing research.

To understand research, certain mathematical ideas are important. “Statistical significance” is important both to accurate interpretation of research and to inaccurate or misleading reports. If you’ll bear with me, I’ll run through what I mean. Suppose you have a coin. If you toss the coin 100 times, it will come up heads about 50 times, not exactly 50 but close. Why? That’s just the way the world we live in works: there are laws of probability. Since there are two possible outcomes (heads or tails), each will come up about half the time. If I toss my coin 100 times and it always comes up heads, I’ll probably conclude the coin is biased. Why? Because it just doesn’t happen; it’s extremely improbable, in the world we live in, that an honest coin would do this.
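To put rough numbers on the coin example, here is a minimal Python sketch. It assumes a perfectly honest coin and independent tosses, and the function name `prob_heads_between` is made up for illustration.

```python
from math import comb

def prob_heads_between(low, high, tosses=100):
    """Probability that an honest coin shows between low and high heads (inclusive)."""
    # Every sequence of tosses is equally likely, so count the sequences
    # with k heads and divide by the total number of possible sequences.
    favorable = sum(comb(tosses, k) for k in range(low, high + 1))
    return favorable / 2 ** tosses

print(f"exactly 50 heads: {prob_heads_between(50, 50):.3f}")
print(f"40 to 60 heads:   {prob_heads_between(40, 60):.3f}")
print(f"all 100 heads:    {prob_heads_between(100, 100):.1e}")
```

Under these assumptions, exactly 50 heads turns up only about 8% of the time, a count somewhere from 40 to 60 turns up roughly 96% of the time, and 100 heads in a row is so rare that it effectively never happens.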

What if the coin came up heads 60 times? Is the coin honest or not? The question is this: When is an outcome still “what you would expect by chance,” even though the numbers are not exactly alike (since we expect approximately 50 heads, not exactly 50)? On the other hand, when is the difference big enough that you would conclude that the coin is probably biased? Sometimes it’s hard to tell. In research, very often results are in the “hard to tell” category. For example, if 55 percent of the women in my research prefer chocolate ice cream, while 65 percent of the men prefer chocolate, is there a real sex difference (a gap this large would be very improbable if there were none) or is there not (the numbers seem different, but the gap may simply fall within the range you get by chance)? Sometimes numbers that seem very different are actually what you could commonly get by chance, and sometimes numbers that don’t seem very different are very improbable. In addition, what I’m studying may produce a weak effect rather than a large, obvious one because among us humans, for all kinds of psychological, social, and biological research, what is being studied is only one factor contributing to a situation and not the only thing going on. In the example, even if men and women do have different likelihoods of preferring chocolate, there are many possible reasons for a person’s choices: diabetes, the city you grew up in, getting rejected by a date while you were eating chocolate ice cream, etc.

Enter tests of statistical significance. These are mathematical procedures which assess how likely an outcome is to have occurred by chance if there was no real underlying difference. If my statistical test revealed that the difference in the percentages of men and women who prefer chocolate ice cream could have occurred purely by chance only one time out of a thousand, I would conclude that my results were in the “there probably is a sex difference” category. Researchers have an arbitrary convention: If results could have happened by chance 5% of the time or less, then the results are considered evidence of a real difference and are said to be “statistically significant.”
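For readers who like to see the arithmetic, here is a rough Python sketch of two such tests. The coin test is an exact two-sided binomial calculation; the ice cream test is a standard two-proportion z-test, which relies on a normal approximation. The function names and the sample sizes (20 or 2,000 people per group) are assumptions invented for illustration, not numbers from any real study.

```python
from math import comb, erfc, sqrt

def coin_p_value(heads, tosses):
    """Chance that an honest coin lands at least this far from half heads."""
    distance = abs(heads - tosses / 2)
    # Add up every outcome that is as far or farther from an even split.
    extreme = sum(comb(tosses, k) for k in range(tosses + 1)
                  if abs(k - tosses / 2) >= distance)
    return extreme / 2 ** tosses

def two_proportion_p_value(yes_a, n_a, yes_b, n_b):
    """Two-sided p-value for a difference between two percentages (z-test)."""
    pooled = (yes_a + yes_b) / (n_a + n_b)  # overall rate if no real difference
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (yes_a / n_a - yes_b / n_b) / std_error
    return erfc(abs(z) / sqrt(2))  # two tails of the normal curve

print(f"60 heads in 100 tosses: p = {coin_p_value(60, 100):.3f}")

# Hypothetical samples: 55% of women and 65% of men prefer chocolate.
print(f"20 per group:   p = {two_proportion_p_value(11, 20, 13, 20):.2f}")
print(f"2000 per group: p = {two_proportion_p_value(1100, 2000, 1300, 2000):.1e}")
```

Under these assumptions, 60 heads gives a p-value of about 0.057, just short of the 5% convention. The same 55 versus 65 percent gap is nowhere near significant with 20 people per group and overwhelmingly significant with 2,000 per group, which is why the raw percentages alone cannot settle the question.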

When media reports state that results are “significant,” very often they mean “statistically significant.” However, statistically significant only means “unlikely to have occurred by chance.” How important a result is is a completely different question. For example, suppose that I was studying a medication that worked about 70% of the time; a sugar pill didn’t work. Suppose this was extremely unlikely to have occurred by chance; that is, the results were statistically significant, and I, therefore, had evidence that the pill was having an effect. If we’re talking about a cancer medication given to people who would otherwise die, but now 70% didn’t, this would be a powerful effect. I would be happy and excited. Suppose instead that the medication was a weight loss pill, and 70% of the people using it lost 5 pounds after 18 months while people given a placebo sugar pill (or perhaps a sugar substitute) didn’t. Even though this result was also statistically significant—I have evidence that those folks wouldn’t have lost the 5 pounds without the pill—the amount of weight lost was so small that I wouldn’t be happy and excited that I had found a new, important weight loss pill.
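The gap between "statistically significant" and "important" can be sketched in a few lines of Python. The example below uses a simple z-test on the difference between two group averages. All of the numbers are hypothetical: a 5-pound average difference, a spread (standard deviation) of 15 pounds, and the group sizes are assumptions chosen for illustration, and `two_group_p_value` is a made-up name.

```python
from math import erfc, sqrt

def two_group_p_value(mean_difference, spread, n_per_group):
    """Two-sided p-value for a difference in averages (normal approximation)."""
    std_error = spread * sqrt(2 / n_per_group)
    z = mean_difference / std_error
    return erfc(abs(z) / sqrt(2))

difference = 5.0   # hypothetical: pill group lost 5 pounds more on average
spread = 15.0      # hypothetical standard deviation of weight change

for n in (10, 100, 1000):
    p = two_group_p_value(difference, spread, n)
    # The size of the effect never changes; only the p-value does.
    print(f"n = {n:4d} per group: difference = {difference} lb, p = {p:.2g}")
```

In this sketch the 5-pound difference is identical in every row. Only the number of participants changes, and with it the p-value, which drops from clearly non-significant to vanishingly small. Statistical significance tells you how sure you can be that an effect exists, not whether the effect is big enough to matter.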

The research that Chris Hitchcock discusses in her March 13 re:Cycling post is a good example. Women were best at picking out a picture with a snake during the days immediately before their menstrual period. The results were extremely statistically significant—many of the results would have occurred by chance less than one time in ten thousand—and were reported in the media. However, what these results mean and how important they are in affecting behavior are separate questions. Chris discusses the results—the response was faster by 1/5 of a second. She also discusses the theoretical implications the authors choose to draw—that women are responding to anxiety and fear, and that this has something to do with human evolution and PMS. However, does a tiny change in reaction time indicate a meaningful change in anxiety level or the ability to detect danger? Are the changes in reaction time necessarily due to anxiety? For example, the subjects were assigned to experimental groups based on phase of their cycle. Does this mean that they knew the research was about menstruation? If so, this could have influenced their behavior.

There are other important points to being a canny consumer of research reports. As when buying a used car, or even a new car, you can get a really good vehicle, but it’s a good idea to be knowledgeable before making a purchase. Let the buyer beware.

Good topic, Paula. The term “statistical significance” was an unfortunate choice of jargon which is very easy to manipulate. People who are interested in being better at evaluating health evidence might also want to check out this 2005 report: Just the Facts, Ma’am: A Women’s Guide for Understanding Evidence about Health and Health Care (available on-line at https://www.womenandhealthcarereform.ca/publications/evidenceen.pdf).

The authors also talk about issues of bias in the very production of knowledge. I believe that the majority of health research is industry funded, which means that it is directed at questions of commercial value. This leads to a structural bias in the types of evidence that are available, which we also need to keep in mind when looking at evidence.

Thanks for the reference, Chris. And for your point about industry funding and structural bias.

Statistical significance aside, there are so many methodological flaws in this study that its conclusion is likely invalid.

First, the authors state that “…from this experiment, in which no blood samples were collected to verify cycle phase or to correlate hormone levels to behavioral effects”. They cannot conclude that there is a hormonal basis for changes in detection of the snake without first confirming what the hormonal milieu of the participants was at the time of the experiment. Instead they relied on retrospective data, confirming that cycles during the experiment were between 27 and 29 days long. This does not confirm anything about the hormonal status of the participants and does not account for variations from the norm, which include late ovulations, shortened luteal phases, and anovulatory cycles, all of which would cause significant variation in hormones throughout the cycle and could completely invalidate the statement that women were, in particular, in their follicular phase.

Second, the authors make a leap from hormonal status to PMS, calling these changes in visual detection a “rudimentary form of PMS”. A woman in her premenstrual phase is not akin to a woman with premenstrual syndrome. Premenstrual syndrome is poorly defined and has well over 100 documented symptoms and no established cause, although several mechanisms have been proposed.

Third, the authors write that “Here, we reasoned that if heightened anxiety was experienced during the luteal phase of most healthy women, it would be reflected in the performance of visual search as the enhanced detection of snake images as targets…”. The authors do nothing to prove that the participants were in a state of anxiety at the time of the experiment, again invalidating the conclusion that it is anxiety which changes visual detection of the snake.

They go on to suggest that this change is related to “increased progesterone and estradiol levels”; however, these hormones are on the decline during the premenstrual phase, as compared to their levels during the mid-luteal phase. They need to define “increased” in relation to something else, and they need to provide hard data on each woman’s individual levels of hormones before they can come to any such conclusion.

It is very likely that both males and females undergo changes in cognitive ability or visual acuity with fluctuations in hormone levels, but one cannot draw links between hormone levels and snake detection without ever confirming hormone levels. The suggestion that better detection of threats during the premenstrual phase is based on a syndrome characterized by anxiety, without proving that the participants have that syndrome or were even experiencing anxiety, is also not borne out by this research.

Great critique of the research, Megan. The researchers should take note.

And Chris, thanks for the link. I’m going to read “Just the Facts Ma’am” for sure.

Megan–

Thanks for the critique. An additional issue this brings up is peer review: Shouldn’t all of the methodological issues you bring up, as well as others that exist, have been picked up in peer review before the journal accepted the paper for publication?

Agreed. You are right, Paula: great review by Megan, and the peer reviewer/s should have picked up many of the issues in the study before accepting it for publication. Scientific Reports is published by the Nature Publishing Group, who say Scientific Reports uses a “streamlined peer-review system, all papers are rapidly and fairly peer reviewed to ensure they are technically sound.” Perhaps in this particular case, the review process went too fast, and/or the individual/s who reviewed the article had expertise in a different area than endocrine changes in a female fertility cycle?